Overview

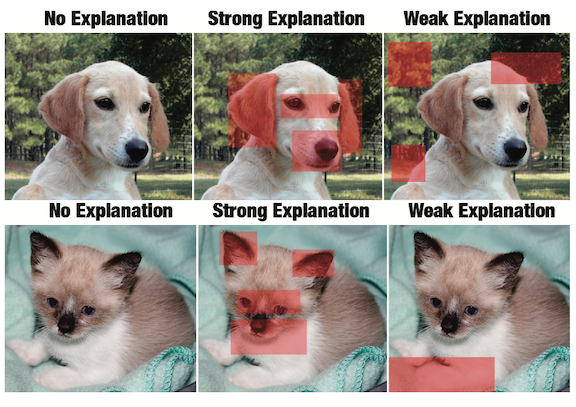

Machine learning and AI systems can aid human decision-making, but their usefulness depends on user trust and comprehension. Researchers have explored explainable interfaces to clarify system outputs. However, how meaningful versus meaningless explanations affect users, especially non-experts, remains unclear. We conducted a controlled image classification experiment with local explanations. The results show that how meaningful an explanation is to humans significantly affects users' perception of system accuracy. Weak, less human-meaningful explanations led users to underestimate the system's accuracy. This underscores the importance of human-understandable rationale in user judgments of intelligent systems.