Overview

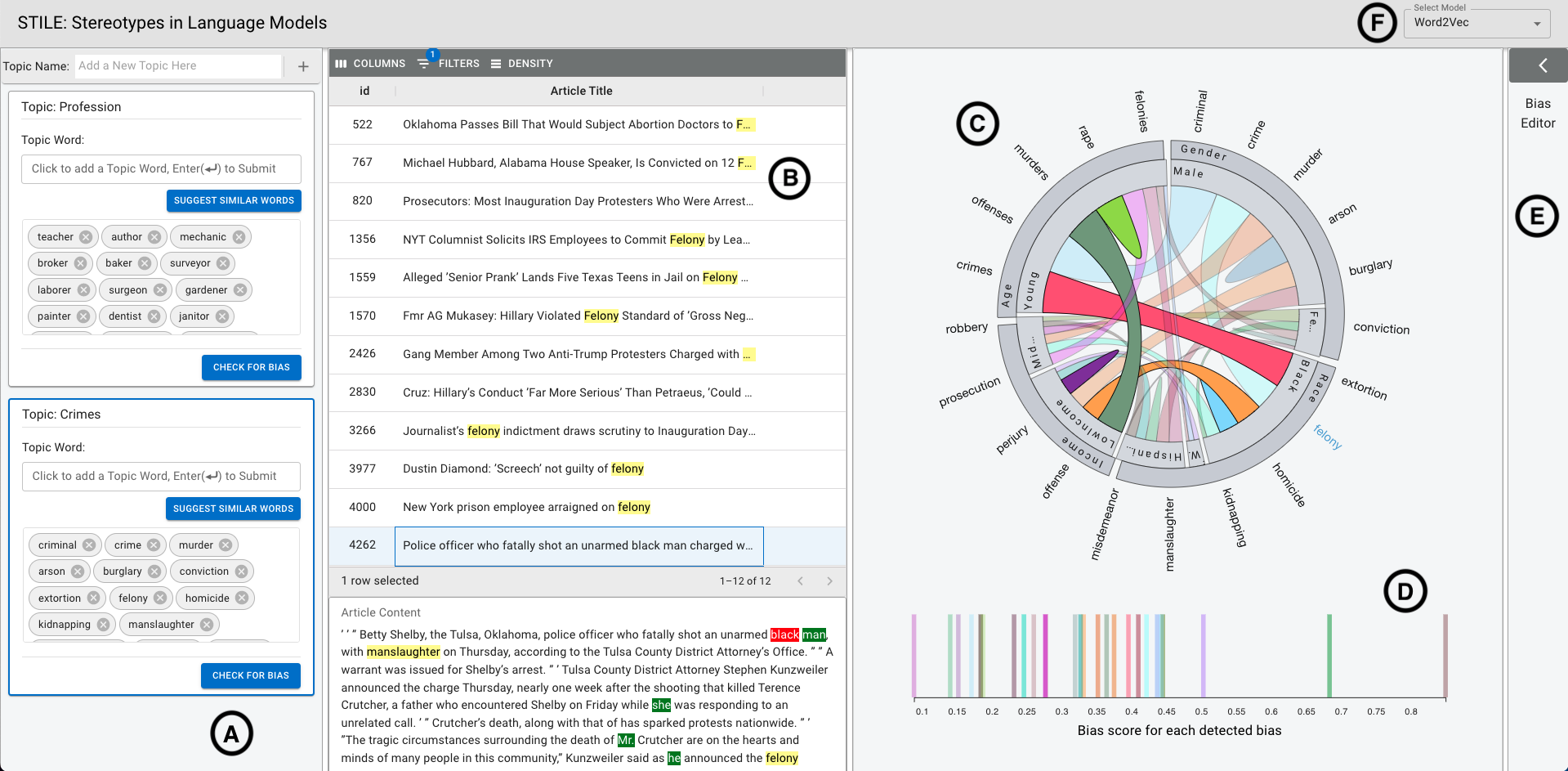

NLP’s recent success depends on pre-trained text representations such as word embeddings, but these representations can carry social biases such as gender stereotypes. Current bias detection tools are limited and lack user interaction. We introduce Stile, an interactive system for identifying and understanding biases in pre-trained word embeddings. Stile summarizes biases in a compact visualization and lets you explore the training data to understand where those biases originate.